Mastering LLM Agents: The Tester’s Essential Guide

Large Language Models (LLMs) such as OpenAI’s GPT and Meta’s LLaMa are arguably the biggest technical revolution since the invention of the internet. ChatGPT reached 100M active users in just two months, faster than any product before it. And it is not just hype. A study found that using ChatGPT leads to noticeable improvements on writing tasks: a 40% drop in task duration coupled with an 18% improvement in quality. The same goes for coding tasks, where assistants like Copilot or Codeium can help turn hours of laborious coding into a 10-minute formality.

Introduction

LLMs’ extensive capabilities have reignited the debate surrounding AGI (Artificial General Intelligence). The primary driver of this renewed debate is LLM Agents: they are like LLMs on steroids. An LLM agent can perform complex tasks by devising a plan of action that breaks the task down into smaller, simpler subtasks. The agent has tools at its disposal that it can call with parameters, and it can adjust its course of action depending on a tool’s output. Agents can thus react to their environment, which allows them to recover from mistakes. Agents can browse the internet to book hotel rooms or search real estate agencies for properties meeting specific criteria. They can write information into files, create issues in Jira, etc.

Now, there is no doubt that these agents will also have a big impact by assisting in software testing-related tasks. We anticipate significant growth in LLM Agents for software testing in the foreseeable future due to two main reasons:

- The increasing capabilities of LLMs make it possible to address complex software testing tasks, for example the design, optimization, and correction of test cases, test scripts, and test code;

- LLM-based AI agents may use data from the project, for example test execution results, the User Stories to be covered, technical information about the application, and actual usage patterns. This makes the agent’s results highly relevant.

Future

But we are at the beginning of this technological shift, as many challenges remain to be solved, depending on the complexity of the targeted testing task.

Generative AI and Large Language Models

Generative AI refers to a class of algorithms capable of creating new, unseen content based on user input. Users may provide a textual description for an image they would like to obtain, and the model produces it. Or it can be the other way around: the user asks the model to describe an image using natural language. There are a variety of models depending on the type of input and expected output. Some models even have multi-modal capabilities as they accept various types of input and similarly can produce various types of output: text, images, sound, etc.

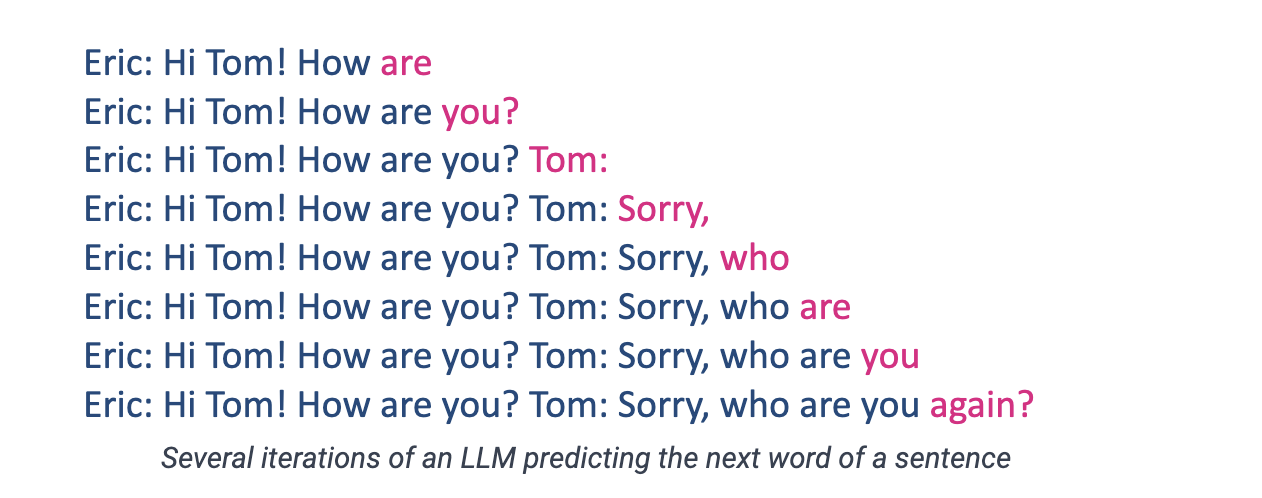

LLMs, as their name suggests, are generative AI algorithms that specialize in text-based data (GPT-4 is still called an LLM despite having multi-modal capabilities). Essentially, they predict the next word of an existing text, whether that text is a few words or many paragraphs long. That’s it! This process is called inference. To generate complete paragraphs, the model applies it repeatedly: each time, the predicted word is appended to the text, and the extended text becomes the input for the next prediction.
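
The generation loop described above can be sketched in a few lines. This is a toy illustration only: the lookup table `NEXT_WORD` and the functions `predict_next_word` and `generate` are made-up names standing in for a real model, which would score every word in its vocabulary using the whole preceding text.

```python
from typing import Optional

# Toy stand-in for an LLM (an assumption for illustration): a fixed table
# mapping the last word of the text to its most likely successor.
NEXT_WORD = {
    "the": "test",
    "test": "suite",
    "suite": "passed",
}

def predict_next_word(text: str) -> Optional[str]:
    """One inference step: return the predicted next word, or None."""
    words = text.split()
    if not words:
        return None
    return NEXT_WORD.get(words[-1])

def generate(prompt: str, max_new_words: int = 10) -> str:
    """Repeat inference, appending each predicted word to the text."""
    text = prompt
    for _ in range(max_new_words):
        word = predict_next_word(text)
        if word is None:  # the toy model has nothing more to say
            break
        text = f"{text} {word}"
    return text

print(generate("the"))  # prints "the test suite passed"
```

The important part is the loop in `generate`: the model itself only ever produces one word, and the surrounding program feeds the growing text back in.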

These models have been trained on gigantic amounts of curated data: entire book libraries, internet data, etc. This training is coupled with a large architecture (remember, they’re called Large Language Models) that allows them to detect semantic connections among words that may be far apart within a text. Typically, an LLM has a context window: the maximum text length within which it can establish word connections. Beyond this context window, it is as if the model had no prior exposure to the text. GPT-3 has a context window of approximately 3,000 words, while GPT-4’s is 25,000 words. Claude 2, an LLM made by Anthropic, has a context window of 75,000 words, which is about the length of the first Harry Potter book!
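
To make the effect of the context window concrete, here is a minimal sketch. It assumes the window is measured in whitespace-separated words, as in the figures above; real models count tokens rather than words, and the function name `visible_context` is hypothetical.

```python
def visible_context(text: str, window_words: int) -> str:
    """Return the part of the text that still fits in the context window.

    Everything before the last `window_words` words is dropped, as if the
    model had never seen it.
    """
    if window_words <= 0:
        return ""
    words = text.split()
    return " ".join(words[-window_words:])

history = "step one failed so we patched the locator and reran the suite"
print(visible_context(history, 4))  # prints "and reran the suite"
```

With a window of four words, the model no longer "knows" that a step failed or that a locator was patched, which is why long-running tasks need some way to re-inject earlier information.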

Bridging the Gap Between Text Understanding and Complex Tasks

As it turns out, LLMs are so good at identifying meaning and making semantic connections that they are able to harness the intelligence and logic that we humans have put into our writings to perform complex tasks with unprecedented success rates. Concretely speaking, LLMs can perform traditional NLP tasks such as text summarization, sentiment analysis, information retrieval, text translation, and more, even without specific training. This is where the real value of LLMs lies, and it is why they have sparked the largest craze ever in the AI community and beyond. The possibilities are endless.

For example, GPT-4 was able to pass the bar exam as well as many other academic exams, beating most of the applicants in the process. LLMs also demonstrate high programming skills: they can generate executable code from textual instructions and suggest real-time fixes for bugs, vulnerabilities, or code quality issues. They can explain error messages, provide smart code completion, and so on. Several companies have developed coding assistants that directly integrate with popular IDEs: GitHub Copilot, Amazon CodeWhisperer, Codeium, TabNine, etc.

What about software testing? LLMs understand both text and code, so they can have an impact in many areas. They can summarize bug reports and name a test case based on its content (text or code). From a program, they can generate a unit test suite. Give them a product specification and they can help derive functional test cases. However, there are more complicated tasks that may involve several iterations or may require interacting with the system under test (SUT) or some test tool. Remember, LLMs are only able to produce the next word, so they will not be able to perform those tasks directly.

LLM Agents: Building Intelligence and Autonomy

LLMs have shown great ability to generate remarkably human-like text and engage in conversations. However, as impressive as they are, they have some limitations. First, they can sometimes “hallucinate” information that isn’t accurate. This means they can make mistakes, which might be challenging to identify if the model’s output isn’t easily verifiable. Second, their knowledge is static, frozen at the time they were trained. GPT-3 and GPT-4 were trained on data from before 2022. If, for instance, you need help using a library that was released in 2023, ChatGPT will most likely be unable to assist you.

Finally, LLMs can’t look up information on the fly like humans can. This is where LLM Agents come in. LLM Agents are programs designed to interact with the outside world in real time. While the LLM generates text, the agent can call APIs, search the internet, or access databases to find up-to-date, factual information. But what is under the hood?

An LLM Agent’s goal is to perform a high-level task by interacting with its environment. The agent receives its task, and given the current state of its environment, “performs” an action. The environment changes and the agent is informed of the change. This information, along with the plan it initially devised, allows the agent to decide which action to perform next. This exchange persists until the agent considers it is done. All this involves a framework composed of three modules: the brain, the prompt, and the controller. The brain is of course the LLM, capable of devising a plan of action and deciding what tool to use next. The two other modules are detailed below.
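
The exchange between agent and environment can be sketched as a simple loop. Everything here is a hypothetical stand-in: `scripted_brain` replaces the LLM with hard-coded rules, `ToyEnvironment` is a pretend project whose tests fail until a fix is applied, and the action names are invented for the example.

```python
from typing import Callable, List

def scripted_brain(task: str, observations: List[str]) -> str:
    """Stand-in for the LLM: pick the next action from what was observed."""
    if not observations or observations[-1] == "patch applied":
        return "run_tests"
    if observations[-1] == "FAILED":
        return "fix_test"
    return "done"

class ToyEnvironment:
    """A pretend project whose test suite fails until it is fixed once."""

    def __init__(self) -> None:
        self.fixed = False

    def step(self, action: str) -> str:
        """Apply the action and report how the environment changed."""
        if action == "fix_test":
            self.fixed = True
            return "patch applied"
        if action == "run_tests":
            return "PASSED" if self.fixed else "FAILED"
        return f"unknown action: {action}"

def run_agent(task: str, brain: Callable[[str, List[str]], str],
              env: ToyEnvironment, max_steps: int = 10) -> List[str]:
    """Loop: decide, act, observe, until the brain says it is done."""
    observations: List[str] = []
    actions: List[str] = []
    for _ in range(max_steps):
        action = brain(task, observations)
        if action == "done":
            break
        actions.append(action)
        observations.append(env.step(action))
    return actions

print(run_agent("make the suite pass", scripted_brain, ToyEnvironment()))
# prints ['run_tests', 'fix_test', 'run_tests']
```

The `max_steps` cap is a design choice worth keeping in a real agent too: it stops the loop if the brain never decides it is done.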

About the prompt

What makes agents possible from LLMs is the fact that such models are highly sensitive to the nature of the prompt (the input query) they are being fed. Research indicates that appending a phrase such as “Let’s think step by step” to a prompt improves the LLM’s outcomes. This addition nudges the LLM to adopt a step-by-step approach, breaking down the problem into stages and aiding in reaching the correct answer. Researchers actively engage in prompt engineering, a practice that constitutes its own research area.

Turning an LLM into an agent requires a meticulously crafted prompt. The prompt establishes the context in which the LLM is called. Specifically, the prompt outlines the LLM’s role (such as a software testing engineer) and guides its approach to addressing the given problems (as demonstrated in an agent prompt). It strongly encourages the LLM to come up with a plan of action. Moreover, the prompt lists the tools available to the LLM, each tool having a distinctive name along with its potential parameters, and the LLM’s past actions. Finally, it specifies the response format of the LLM (for instance, JSON) so that the response can be parsed.
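
As an illustration, the ingredients listed above (role, plan instruction, tools, past actions, response format) could be assembled like this. The wording of the prompt, the function `build_agent_prompt`, and the tool names are all assumptions made for the sketch, not a prescribed template.

```python
import json
from typing import Any, Dict, List

def build_agent_prompt(role: str, task: str,
                       tools: Dict[str, List[str]],
                       past_actions: List[Dict[str, Any]]) -> str:
    """Assemble an agent prompt from its role, tools, history, and format."""
    # One line per tool: its name followed by its parameter names.
    tool_lines = [f"- {name}({', '.join(params)})" for name, params in tools.items()]
    # Past actions are serialized as JSON so the LLM sees them verbatim.
    history = [json.dumps(action) for action in past_actions] or ["(none yet)"]
    return "\n".join([
        f"You are a {role}.",
        f"Task: {task}",
        "First devise a plan, then carry it out one action at a time.",
        "Available tools:",
        *tool_lines,
        "Past actions:",
        *history,
        'Respond only with JSON of the form {"tool": "<name>", "args": {...}}.',
    ])

prompt = build_agent_prompt(
    role="software testing engineer",
    task="Repair the failing login test",
    tools={"run_tests": ["path"], "read_file": ["path"]},
    past_actions=[],
)
print(prompt)
```

Because the prompt is rebuilt on every call, the list of past actions grows as the agent works, which is how the LLM keeps track of what it has already done.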

Astonishingly, the LLM is capable of understanding and following all these constraints and guidelines, to act as an agent capable of interacting with its environment for the completion of complicated tasks.

The controller

The controller is the crucial module that allows the LLM agent to take action in the real world. It acts as the interface between the LLM brain and the external environment. It has many key responsibilities:

- Sending prompts to the LLM and receiving its responses

- Parsing the LLM’s output to extract the actions it wants to take

- Executing those actions by calling APIs, web services, etc.

- Collecting the results and outputs of those actions

- Feeding those results back into the prompt to inform the LLM

- Storing each action the LLM takes in a database for tracking

- Injecting relevant past actions back into the prompt for context

The controller allows the LLM to affect real-world environments, while also giving it the context and grounding in past actions that it needs to decide what steps to take next. Essentially, the controller translates the LLM’s linguistic abilities into practical capabilities. With the controller handling the intricacies of interacting with the outside world, the LLM solely focuses on planning and reasoning to accomplish complex tasks. The two modules combine to create a system that can perceive, reason, and take intelligent actions.
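
A single controller step covering parsing, execution, and record keeping might look like the following minimal sketch. It assumes the LLM replies with JSON containing `tool` and `args` keys; the function `controller_step` and the `add` tool are hypothetical, and a plain list stands in for the tracking database.

```python
import json
from typing import Any, Callable, Dict, List

def controller_step(llm_reply: str,
                    tools: Dict[str, Callable[..., str]],
                    history: List[Dict[str, Any]]) -> str:
    """Parse one LLM reply, run the requested tool, and record the outcome."""
    try:
        action = json.loads(llm_reply)            # parse the LLM's output
        tool = tools[action["tool"]]              # look up the requested tool
        result = tool(**action.get("args", {}))   # execute it with its parameters
    except (json.JSONDecodeError, KeyError, TypeError) as error:
        # Malformed reply, unknown tool, or wrong arguments: report the error
        # back instead of crashing, so the LLM can correct itself.
        action = {"raw": llm_reply}
        result = f"error: {error!r}"
    history.append({"action": action, "result": result})  # store for tracking
    return result

tools = {"add": lambda a, b: str(a + b)}
history: List[Dict[str, Any]] = []
print(controller_step('{"tool": "add", "args": {"a": 2, "b": 3}}', tools, history))
# prints 5
```

The returned result, together with `history`, is what gets fed back into the next prompt. Returning errors as ordinary results is one possible design: it lets the agent see its own mistake and try a different action.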

Conclusion

The future will show that LLM agents can support various software testing tasks, facilitating the daily work of testers, test managers, and test automation engineers. At Smartesting, we see LLM Agents as an extraordinary opportunity to better assist them in multiple tasks: end-to-end test generation, automated repair of broken test code, test data suggestion, assistance in creating BDD scripts, and many other exciting topics. We also know that LLM agents are not the answer to every subject and that classic Machine Learning algorithms (e.g. clustering to classify test reports) and Deep Learning algorithms (e.g. auto-encoders to detect anomalies in logs) also provide answers for testing and quality engineering. That’s the full range of AI technologies we’re exploring at Smartesting to continually strengthen our Gravity and Yest products.